Where should AI live?

Drones, robots, and other autonomous things

We’re standing at a crossroads in AI deployment, where the choice between running AI on-device and pushing workloads off to infrastructure (cloud or on-prem) has never been more nuanced.

Especially for drones and robotics.

On one path, we’ve got heavy cloud or server-side compute: big models, huge datasets, limitless scalable power. The other path winds through edge compute: smaller hardware, real-time processing, power constraints, data locality. Today’s trend is more of a spectrum than a binary choice. Decisions depend on latency needs, power budgets, sensor bandwidth, autonomy needs, and overall system criticality.
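To make those factors concrete, here is a minimal Python sketch of a rule-of-thumb placement check. The `Workload` fields, the 99% link-availability threshold, the order of the checks, and the three output labels are all illustrative assumptions rather than a standard method; real systems weigh these factors with far more nuance.

```python
from dataclasses import dataclass

@dataclass
class Workload:
    # Hypothetical description of one AI workload; field names are illustrative.
    name: str
    deadline_ms: float          # hardest response deadline the workload must meet
    link_rtt_ms: float          # typical round trip to remote compute
    link_availability: float    # fraction of time the link is usable (0..1)
    sensor_mbps: float          # raw sensor data rate feeding the workload
    uplink_mbps: float          # usable uplink bandwidth
    local_watts: float          # power the model would draw if run onboard
    power_budget_watts: float   # power the platform can spare for it
    safety_critical: bool       # could a late answer hurt people or equipment?

def place(w: Workload) -> str:
    """Return 'on-device', 'hybrid', or 'offload' using simple rules of thumb."""
    # Safety-critical loops, and deadlines the network can never meet, stay local.
    if w.safety_critical or w.link_rtt_ms >= w.deadline_ms:
        return "on-device"
    # Raw sensor data too large for the uplink: preprocess locally, ship features.
    if w.sensor_mbps > w.uplink_mbps:
        return "hybrid"
    # Unreliable links need a local fallback path (assumed 99% threshold).
    if w.link_availability < 0.99:
        return "hybrid"
    # Reliable link, relaxed deadline: offload only if onboard power is too costly.
    if w.local_watts > w.power_budget_watts:
        return "offload"
    return "on-device"
```

Ordering the checks this way encodes the priorities described above: safety and latency first, then sensor bandwidth and connectivity, then power and cost.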

Let’s peel back the layers and see how folks are mapping this terrain today, and where it’s headed.

Compute tradeoffs: On-device vs offloading

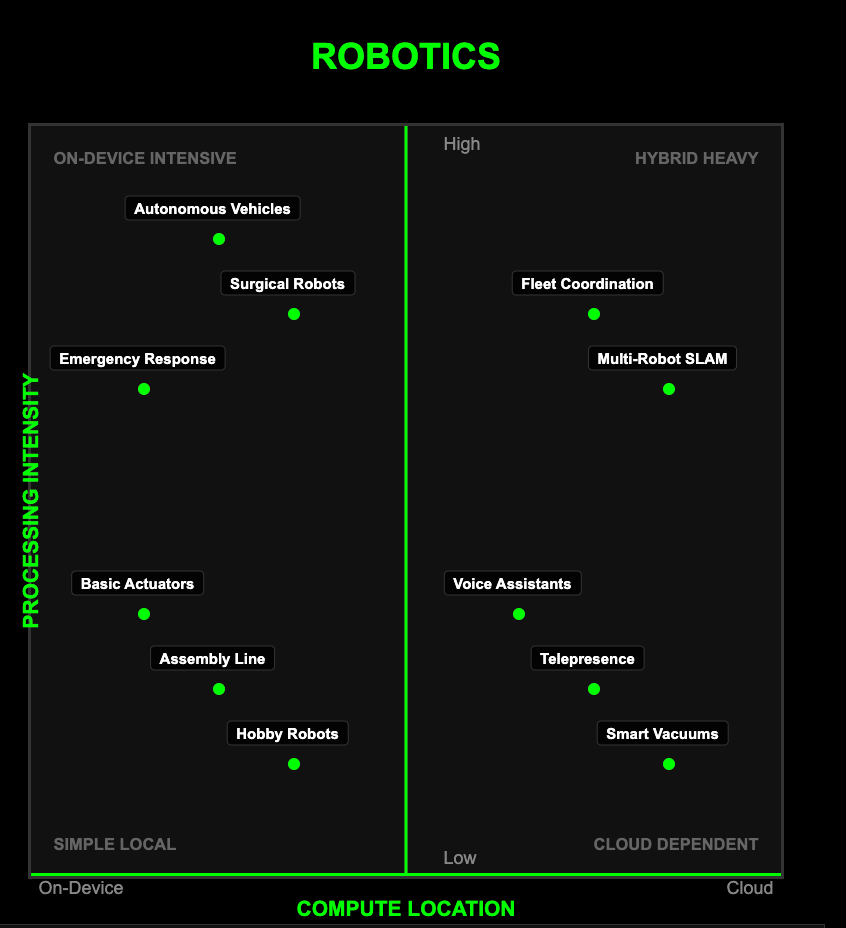

Robotics

The overarching story is simple: robots that work in factories, hospitals, or warehouses need to make decisions instantly. If they waited for data to travel to the cloud and back, they'd be too slow to be safe or useful. That's why the entire field keeps moving toward doing everything right on the machine itself. Bottom line for robotics: the future bends strongly toward all-in-one autonomy.

Simple Local (Bottom Left): Small robots and hobby projects occupy this space, using affordable boards like the Jetson Nano. These systems handle basic vision and control tasks with minimal computational overhead, perfect for educational robotics and simple automation where processing demands are light but real-time response is still needed.

On-Device Intensive (Top Left): This is where the robotics revolution is happening. Advanced robots like humanoids and warehouse bots now run powerful chips like Jetson Thor, capable of handling multiple large AI models simultaneously right on the robot. These systems can see, plan, and act in real time without any external dependency—exactly what's needed for safety-critical applications in factories, hospitals, and warehouses where even milliseconds of network latency could mean disaster.

Hybrid Heavy (Top Right): Some sophisticated tools like VPEngine or cuVSLAM help distribute workloads more efficiently across a robot's sensors and processors. However, these systems are still fundamentally designed to keep the robot independent of cloud connectivity. They represent optimization of on-device compute rather than true cloud dependence.

Cloud Dependent (Bottom Right): This quadrant is shrinking rapidly. Legacy systems and some specialized coordination tasks still require network connectivity, but the trend is clear: as edge compute becomes more powerful and affordable, fewer robotics applications justify the latency and reliability risks of cloud dependence.

People in fleet management or industrial coordination will push back: factories and warehouses often prefer centralized orchestration for efficiency and safety oversight. But it’s not binary: control loops local, coordination global. Autonomy can coexist with centralized oversight by layering decision scopes.
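Here is a minimal sketch of "control loops local, coordination global", assuming a hypothetical robot whose fleet coordinator sends speed goals. The class name, the 2-second goal lifetime, and the choice to stop when goals go stale are illustrative assumptions; the point is that the local loop never blocks on the network and always has the last word on safety.

```python
from typing import Optional

class LocalController:
    """Fast inner loop: always runs on the robot and never waits on the network."""

    def __init__(self, max_speed: float, goal_ttl_s: float = 2.0):
        self.max_speed = max_speed      # hard local safety limit (m/s)
        self.goal_ttl_s = goal_ttl_s    # assumed lifetime of a fleet goal (s)
        self.goal_speed = 0.0           # last speed goal from the fleet layer
        self.goal_stamp: Optional[float] = None

    def receive_goal(self, speed: float, stamp: float) -> None:
        # Called whenever the (possibly remote) coordinator delivers a goal.
        self.goal_speed = speed
        self.goal_stamp = stamp

    def step(self, now: float, obstacle_close: bool) -> float:
        """Return the speed command for this control tick."""
        if obstacle_close:
            return 0.0  # local safety override beats any fleet goal
        if self.goal_stamp is None or now - self.goal_stamp > self.goal_ttl_s:
            return 0.0  # missing or stale goal: hold position (assumed policy)
        return min(self.goal_speed, self.max_speed)  # clamp goal to local limit
```

The coordinator can be slow, remote, or briefly offline; it only shapes goals, while the decision scope for stopping stays on the machine.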

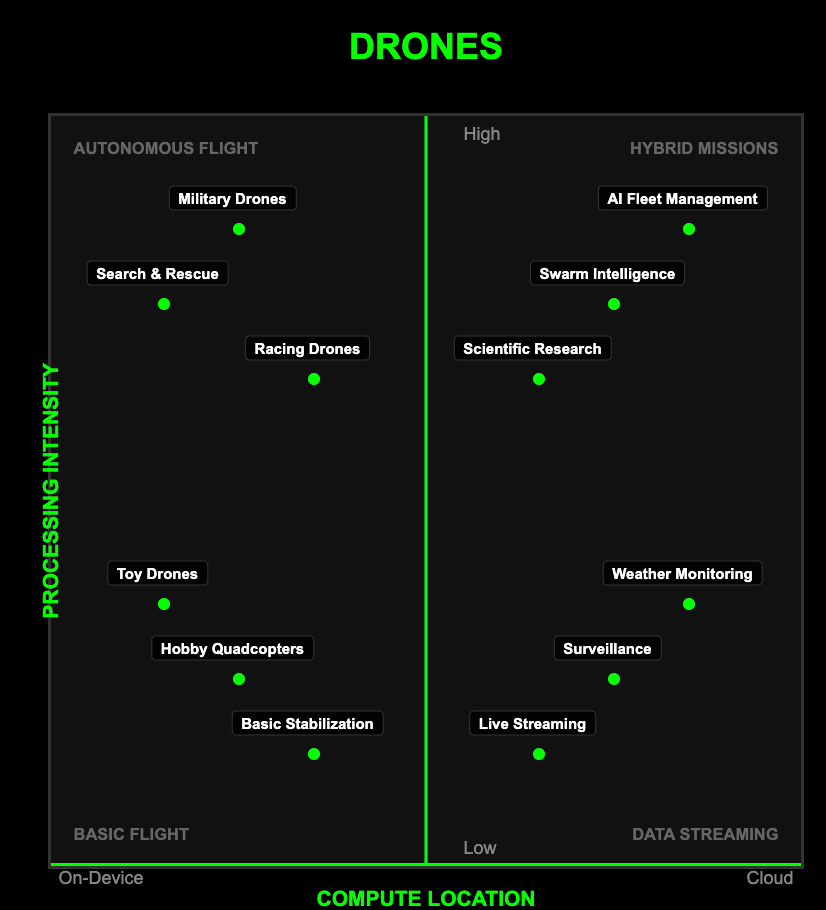

Drones

Drones face a unique constraint that ground robots don't: they're literally fighting gravity every second they're airborne. This creates an interesting tension: you need enough onboard smarts to stay in the air safely, but every gram of compute hardware reduces flight time. The result is a field much more willing to use hybrid approaches than robotics is, splitting critical flight functions (always local) from mission intelligence (often remote). The future of drones usually isn't total autonomy, as it is for robots; it's smart specialization of which processing happens where.
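To see why every gram matters, ideal momentum theory gives hover power as $P = \sqrt{(mg)^3 / (2\rho A)}$, so power grows with mass to the 3/2 power before the compute module's own draw is even counted. The sketch below applies that formula; the airframe (1.5 kg quad on 10-inch props), the 74 Wh pack, the 50% overall efficiency, and the 200 g / 15 W AI module are all assumed numbers for illustration, not measurements of any real drone.

```python
import math

RHO_AIR = 1.225  # sea-level air density, kg/m^3
G = 9.81         # gravitational acceleration, m/s^2

def hover_power_w(mass_kg: float, rotor_radius_m: float, n_rotors: int = 4,
                  efficiency: float = 0.5) -> float:
    """Electrical hover power: ideal momentum-theory power divided by an assumed
    overall efficiency (rotor figure of merit times motor/ESC efficiency)."""
    disk_area = n_rotors * math.pi * rotor_radius_m ** 2
    thrust = mass_kg * G
    ideal = math.sqrt(thrust ** 3 / (2 * RHO_AIR * disk_area))
    return ideal / efficiency

def flight_time_min(mass_kg: float, battery_wh: float, compute_w: float,
                    rotor_radius_m: float = 0.127) -> float:
    """Hover endurance in minutes: battery energy over propulsion plus compute power."""
    total_w = hover_power_w(mass_kg, rotor_radius_m) + compute_w
    return battery_wh * 60 / total_w  # Wh / W = hours; x60 = minutes

# Assumed airframe: 1.5 kg quad, 10-inch props (0.127 m radius), 74 Wh pack.
baseline = flight_time_min(mass_kg=1.5, battery_wh=74, compute_w=2)   # flight controller only
with_ai = flight_time_min(mass_kg=1.7, battery_wh=74, compute_w=17)   # +200 g, +15 W AI module
```

With these made-up inputs the extra module costs several minutes of hover time, partly through its own power draw and partly because the added mass raises propulsion power superlinearly.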

Basic Flight (Bottom Left): Hobby quadcopters and toy drones live here, running simple flight stabilization on basic microcontrollers. These systems focus purely on keeping the aircraft airborne and responsive to pilot commands - no fancy AI, just reliable physics and control loops that work every time you power up.

Autonomous Flight (Top Left): This is where drones get serious. Military drones, search and rescue aircraft, and racing drones operate in environments where connectivity is unreliable or nonexistent. They pack serious computational power onboard to handle real-time navigation, obstacle avoidance, and mission execution completely independently. When you're flying into a disaster zone or through a GPS-jammed battlefield, the drone has to be smart enough to figure everything out on its own.

Hybrid Missions (Top Right): Advanced commercial applications like swarm intelligence and AI fleet management split their brains between sky and ground. The drone handles immediate flight safety and basic navigation locally, while coordinating with ground systems for complex mission planning, traffic management, and collaborative behaviors. Think delivery drones that need to navigate local obstacles instantly but optimize routes through cloud-based traffic systems.

Data Streaming (Bottom Right): Surveillance drones, weather monitoring platforms, and live streaming aircraft are essentially flying sensors. They keep flight control simple and local while beaming everything they see back to ground-based systems for analysis. The real intelligence happens on the ground - the drone is just a sophisticated data collection platform with wings.
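The "control loops" behind the Basic Flight quadrant are typically PID-style controllers, one per axis. Below is a minimal Python sketch of one; real flight controllers run this in C or C++ on a microcontroller hundreds to thousands of times per second, and the gains, the toy plant, and the 100 Hz loop rate here are assumptions for illustration only.

```python
from typing import Optional

class PID:
    """Textbook PID loop. Gains and limits are placeholders; real firmware adds
    anti-windup, derivative filtering, and per-airframe tuning."""

    def __init__(self, kp: float, ki: float, kd: float, out_limit: float):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.out_limit = out_limit            # symmetric actuator saturation
        self.integral = 0.0
        self.prev_error: Optional[float] = None

    def update(self, setpoint: float, measured: float, dt: float) -> float:
        error = setpoint - measured
        self.integral += error * dt
        # Skip the derivative term on the first call, when there is no history.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        out = self.kp * error + self.ki * self.integral + self.kd * derivative
        return max(-self.out_limit, min(self.out_limit, out))  # clamp to actuator range

# Toy plant: the command sets roll rate directly, so roll angle integrates it.
pid = PID(kp=5.0, ki=0.0, kd=0.0, out_limit=10.0)
roll = 0.0
for _ in range(1000):  # 10 s at an assumed 100 Hz loop rate
    roll += pid.update(setpoint=1.0, measured=roll, dt=0.01) * 0.01
```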

NVIDIA and the rise of Physical AI

On-device inference is critical wherever split-second decision-making is required, especially in dynamic physical environments. Latency must be near-zero, and cloud outages or network hiccups aren’t an option.
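One way to see why latency matters physically is to convert it into distance: a machine keeps moving while it waits for a decision. The sketch below does that arithmetic; the 2 m/s speed, 20 ms inference time, 80 ms round trip, and 100 ms worst-case jitter are assumed values for illustration, not measurements.

```python
def reaction_distance_m(speed_mps: float, compute_ms: float,
                        network_rtt_ms: float = 0.0, jitter_ms: float = 0.0) -> float:
    """Distance covered between sensing an event and acting on the decision."""
    total_ms = compute_ms + network_rtt_ms + jitter_ms
    return speed_mps * total_ms / 1000.0

# Assumed scenario: a robot moving at 2 m/s, 20 ms of inference either way.
onboard = reaction_distance_m(2.0, compute_ms=20)                                      # 0.04 m
via_cloud = reaction_distance_m(2.0, compute_ms=20, network_rtt_ms=80, jitter_ms=100)  # 0.4 m
```

Under these assumptions the cloud path travels ten times farther "blind", and the network terms, not the model, dominate.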

One big milestone: NVIDIA’s Jetson AGX Thor, key evidence of the hardware shift toward real-time physical AI. It launched just last month, and the early adopters are big names: Agility Robotics, Amazon Robotics, Boston Dynamics, Caterpillar, Figure, and Meta, with evaluations ongoing at John Deere and OpenAI.

What this means is that robots no longer have to rely on cloud-hosted models to imagine, plan, and act. They can run multiple generative AI models—including vision-language and reasoning models—right on board, ingesting sensor streams and making intelligent decisions without network lag. NVIDIA here is defining “physical AI” as enabling agents that perceive, reason, and act in real time.

In systems like humanoid robots (e.g., Figure’s robots or Boston Dynamics’ Atlas) or logistics bots (Agility Robotics’ Digit), real-time adaptivity is paramount. You can’t wait for cloud inference when stability or safety depends on an immediate response. Jetson Thor’s performance leap (7.5× the AI compute and 3.5× the energy efficiency of Jetson Orin) is a defining shift.
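Taken together, those two ratios also imply something about power draw. Assuming both are peak-to-peak comparisons and that "energy efficiency" means compute per watt ($\eta = C/P$, so $P = C/\eta$), a back-of-envelope calculation gives:

```latex
\frac{P_{\text{Thor}}}{P_{\text{Orin}}}
  = \frac{C_{\text{Thor}} / C_{\text{Orin}}}{\eta_{\text{Thor}} / \eta_{\text{Orin}}}
  = \frac{7.5}{3.5} \approx 2.1
```

Under those assumptions Thor's peak draw is roughly twice Orin's: the efficiency gain makes far more compute affordable, but not free for power-budgeted platforms.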

Thor also supports massive multi-sensor ingestion and real-time fusion via the Holoscan and Isaac stacks, processing camera, lidar, and other sensor streams on-device. The result? Robots that perceive, think, and move like agents rather than teleoperated or latency-bound machines.


Offloading to the cloud still has its place

But not every system is latency-sensitive, and not every application can afford heavy on-device compute. Consumer drones, for example, often have constrained payload, limited power, and tight cost budgets.

This is where startups like Drone Forge come in. Drone Forge is building architecture and services to manage fleets of drones, perhaps including the ability to offload compute to land-based systems, effectively decoupling heavy AI from drone hardware. The core value proposition: if your drone isn't burdened with heavy models, you can buy cheaper, lighter drones yet still deliver “cool AI” via remote inference or aggregated fleet compute. It’s a model well suited to tasks where real-time response isn’t critical, or where a short, reliable link can be guaranteed.

Even where offloading makes sense, sensor processing nearly always happens on-device. Raw camera, lidar, radar, and IMU data must be captured, preprocessed, and possibly compressed before remote inference is even an option. But the heavy lifting can safely reside offboard, preserving drone agility and lowering costs.
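The on-device half of that split can be sketched with the Python standard library alone. This is a minimal illustration, assuming 8-bit grayscale frames; the decimation factor, the `prepare_for_offload` name, and the 4-byte header format are hypothetical, and a real drone would more likely use a hardware video encoder than zlib.

```python
import zlib

def downsample(frame: bytes, width: int, height: int, factor: int) -> tuple[bytes, int, int]:
    """Keep every `factor`-th pixel in each dimension of an 8-bit grayscale frame."""
    out = bytearray()
    for y in range(0, height, factor):
        row = frame[y * width:(y + 1) * width]
        out.extend(row[::factor])
    new_w = len(range(0, width, factor))
    new_h = len(range(0, height, factor))
    return bytes(out), new_w, new_h

def prepare_for_offload(frame: bytes, width: int, height: int, factor: int = 2) -> bytes:
    """On-device step: shrink and compress a frame before any remote inference."""
    small, w, h = downsample(frame, width, height, factor)
    # Hypothetical wire format: 2-byte width, 2-byte height, then the zlib payload.
    header = w.to_bytes(2, "big") + h.to_bytes(2, "big")
    return header + zlib.compress(small, level=6)
```

Only the compact payload crosses the link; the ground side would decompress it and run the heavy model.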

What’s Changing the Game in 2025

We’re watching a quiet revolution. For years, people argued: should intelligence live on the machine, or should it sit in the cloud? In 2025, that argument feels outdated. The real breakthrough isn’t picking one path. It’s the ability to design across the spectrum. To decide, moment by moment, what belongs local and what belongs distributed. That flexibility changes everything.

From Single Agents to Coordinated Systems

For years, the benchmark was whether a single robot could perform a task. Now, the focus is shifting toward coordination: how multiple machines share intelligence, balance workloads, and adapt as a group. That matters because autonomy at scale—whether in a warehouse, a battlefield, or a city—depends on cooperation. Recent work like RoboBallet, where a cluster of robotic arms performed complex tasks in sync, shows what becomes possible once coordination is treated as the first-class problem rather than an afterthought.

From Recognition to Reasoning

Recognition (spotting an object, classifying an image) was enough for first-generation robots and drones. But edge systems in 2025 are being judged on their ability to reason. Can they fold a shirt they’ve never seen before? Plan a route when a warehouse layout changes? Respond safely when conditions shift mid-flight? Models like DeepMind’s Gemini Robotics highlight this evolution, moving robots closer to the kind of flexible reasoning that makes autonomy practical in unstructured environments.

From Vertical Stacks to Open Ecosystems

Another important change is architectural. Hardware is no longer a bottleneck controlled by a few suppliers. New chipmakers (Axelera in Europe, Horizon Robotics in China) are offering alternatives tuned for vision and embodied AI, while alliances like South Korea’s K-Humanoid are pooling resources across academia and industry. That diversification matters because it lowers costs, reduces geopolitical risk, and accelerates experimentation. Instead of one company dictating the roadmap, we’re seeing an ecosystem of players shaping the edge AI stack.

From Hype to Market Reality

Finally, the economics are catching up. Edge AI is no longer a niche: analysts expect the market to quadruple over the next five years as industries adopt real-time intelligence at scale. For robotics and drones, this means decisions about compute placement aren’t theoretical anymore. Companies have to pick architectures today that will underpin billion-dollar deployments tomorrow. Those choices (between local, cloud, or hybrid designs) are becoming strategic differentiators rather than technical details.

On coordination

Some might argue that true coordination requires centralized control and reliable connectivity. But coordination doesn’t disappear when you move intelligence to the edge. It changes form. Instead of relying on a single centralized brain, agents can run lightweight models locally and share intent, status, or partial plans across the mesh. That way, each node stays resilient if the network falters, but when connectivity is strong, the group can still synchronize at scale. This hybrid approach preserves the benefits of coordination without making the whole system fragile to a single point of failure.
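The "share intent, degrade gracefully" pattern can be sketched in a few lines. This is a minimal illustration in a hypothetical grid world: the `Intent` and `Agent` names, the 1-second freshness window, and the wait-if-blocked rule are assumptions, and message transport over the mesh is left out entirely.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]

@dataclass
class Intent:
    agent_id: str
    target_cell: Cell  # grid cell the sender plans to occupy next
    stamp: float       # sender's timestamp, seconds

@dataclass
class Agent:
    agent_id: str
    stale_after_s: float = 1.0  # assumed freshness window for peer intents
    peers: Dict[str, Intent] = field(default_factory=dict)

    def on_message(self, intent: Intent) -> None:
        """Store the newest intent per peer; mesh messages may arrive out of order."""
        known = self.peers.get(intent.agent_id)
        if known is None or intent.stamp > known.stamp:
            self.peers[intent.agent_id] = intent

    def choose_cell(self, candidates: List[Cell], now: float) -> Optional[Cell]:
        """Return the first preferred cell not claimed by a fresh peer intent.
        Stale intents are ignored, so if the mesh goes quiet the agent simply
        plans alone; None means every candidate is claimed and it should wait."""
        claimed = {i.target_cell for i in self.peers.values()
                   if now - i.stamp <= self.stale_after_s}
        for cell in candidates:
            if cell not in claimed:
                return cell
        return None

    def announce(self, cell: Cell, now: float) -> Intent:
        """Build the intent message this agent would broadcast to its peers."""
        return Intent(self.agent_id, cell, now)
```

No node depends on a central planner: with fresh peer data the agents avoid each other, and without it each one still acts on its own local plan.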

So Where Is Everything Trending?

Here’s how I’d describe the arc:

On-device AI is accelerating as hardware catches up to model complexity. Robotics is leading the charge: platforms like Thor make edge autonomy viable for once-unreachable workloads. The more physically interactive, safety-critical, or data-rich the system, the more it tilts toward on-device compute.

Offloading still matters for cost-constrained consumer systems: low latency isn’t always necessary, and leveraging remote infrastructure lets lighter hardware shine. Drone Forge and similar approaches fit this mold, pushing heavy AI to the backend.

But the boundary is shifting. Affordable high-performance AI at the edge makes on-device compute increasingly accessible. Sensor-rich architectures and advanced frameworks drive compute demands upward, and because shipping that much raw data off the machine costs bandwidth and latency, cloud offloading becomes less appealing for real-time tasks.

Tooling and stack maturity blur the line further—software ecosystems now support complex AI models at the edge nearly as easily as in the cloud. Even emerging open-ISA support and edge-optimized model frameworks reinforce this shift.

The future? Hybrid. Systems will increasingly shift work dynamically between on-device and offloaded compute. Cars, robots, and drones may run time-critical control and lightweight agents onboard, but offload heavier reasoning or model updates when network conditions allow.
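A runtime version of "offload when network conditions allow" might look like the sketch below. It tracks an exponentially weighted moving average (EWMA) of measured round-trip times and picks remote, local, or deferred execution per request; the `OffloadPolicy` class, the smoothing factor, and the three outcomes are illustrative assumptions, not a specific product's behavior.

```python
from typing import Optional

class OffloadPolicy:
    """Per-request choice between remote and local inference, driven by an
    EWMA of link round-trip times. All thresholds are illustrative."""

    def __init__(self, deadline_ms: float, remote_infer_ms: float,
                 local_infer_ms: float, alpha: float = 0.2):
        self.deadline_ms = deadline_ms
        self.remote_infer_ms = remote_infer_ms   # expected server-side inference time
        self.local_infer_ms = local_infer_ms     # expected onboard inference time
        self.alpha = alpha                       # EWMA smoothing factor (assumed)
        self.rtt_ewma_ms: Optional[float] = None  # no measurements yet
        self.link_up = False

    def observe(self, rtt_ms: Optional[float]) -> None:
        """Record a link probe; None means the probe timed out."""
        if rtt_ms is None:
            self.link_up = False
            return
        self.link_up = True
        if self.rtt_ewma_ms is None:
            self.rtt_ewma_ms = rtt_ms
        else:
            self.rtt_ewma_ms = self.alpha * rtt_ms + (1 - self.alpha) * self.rtt_ewma_ms

    def choose(self) -> str:
        """'remote' if the link is up and fits the deadline, else 'local' if the
        onboard model fits, else 'defer' until conditions improve."""
        if self.link_up and self.rtt_ewma_ms is not None:
            if self.rtt_ewma_ms + self.remote_infer_ms <= self.deadline_ms:
                return "remote"
        if self.local_infer_ms <= self.deadline_ms:
            return "local"
        return "defer"
```

Smoothing the RTT keeps a single slow probe from flipping the decision, while a timed-out probe immediately pulls work back on board.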

In markets, robotics and autonomous industrial applications will lean heavily on on-device, real-time, foundation-model compute. Consumer edge devices (drones, toys, smart cameras) will negotiate dynamically between onboard inference and cloud scale.

Ultimately, the trend is toward more capable edge AI, better connectivity, and smarter offload strategies—and as hardware and AI co-evolve, the gap between “on-device” and “off-device” will keep shrinking.